Redesign of ShapesXR

When I came to ShapesXR, the application was suffering from bloat. The team had done an incredible job launching an app in under a year, but the interface had grown beyond what the app needed. I guided the team through a full redesign of the app while also adding a few new features so the app wouldn't go stale as the market evolved. We wanted a system that was:

- Familiar to new users

- Flexible across platforms

- Extensible for future growth

- Able to keep users in the flow

We reimagined the visual language, input mapping, manipulation system, information architecture, visuals, and UI layout system.

We launched 2.0 simultaneously on the web and Quest at AWE in June 2024. It took longer than we wanted to get the new version out, but it was well worth the wait. The new system checks all the boxes. It was FLEXIBLE enough that we could support the Logitech MX Ink stylus with almost zero design or development work. It was FAMILIAR enough to the participants in our user testing that we felt we could launch without an interactive onboarding system. It was EXTENSIBLE enough that, once the redesign was implemented, we were able to ship three major new features in a month (sounds, touch interactions, and procedural primitives).

Accessibility and Flexibility

When designing our new input system, we started with how it might work with only hands. We figured that if we could get everything working with just hands, it would work on any platform. Here you can see the non-dominant hand grabbing the selection tool. On controllers, the shortcut most people use for this is the control stick.

And it paid off. Here you can see how it works on a device that doesn't have a control stick, the Logitech MX Ink. Now users can change the size of their tools by just grabbing them with the non-dominant hand without having to dig through a settings menu.

Visual Design

After Shapes 1.0 came out, we saw other applications mimic our UI in their designs. We figured the best way to inspire the industry was to create a beautiful interface that people might mimic again. So we took some extra time to craft something we thought was authentic to the medium, meaning it used the qualities of the medium in useful ways rather than mimicking mobile or desktop UI. In stereoscopic media, we can use depth and shadow to authentically show focus. We can also use portals to give a sense of volume and interest. We used subtle reflections to add a feeling of physicality and quality to the interface.

We achieved a very minimal UI that I believe is authentic to XR.

The response from customers has been positive, although there is still a lot of work to be done to fix all the little issues.

Generative AI in ShapesXR

I balanced individual contributions with managing the design team. One of the last features I worked on as an IC at ShapesXR was crafting the AI strategy. We can't share much, but I'll discuss the explorations we have shown publicly.

Blockade Labs Integration

When Blockade Labs first came out, we wondered if it would be a good fit for ShapesXR. I thought that if we could project the backgrounds in true 3D depth, we could quickly create scenes using generative AI. Using Blender, I created a shader that used a depth map from LeiaPix to deform the sphere map. I got decent results for some types of scenes, as you can see in the video.
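The original was a Blender shader, which isn't shown here. As a hedged illustration of the general idea (pushing each point of an equirectangular sphere in or out along its radius according to a depth map), here is a minimal Python sketch. The function name `displace_sphere`, the `base_radius` and `depth_scale` parameters, and the convention that a depth value of 1.0 means "nearest" are all assumptions for illustration, not details of the actual shader or of LeiaPix output.

```python
import math

def displace_sphere(depth, base_radius=10.0, depth_scale=0.5):
    """Hypothetical sketch: map an equirectangular depth map to 3D points.

    depth: 2D list of floats in [0, 1]; rows run top to bottom (latitude),
    columns run left to right (longitude). ASSUMPTION: 1.0 means nearest
    to the viewer, who sits at the origin.
    """
    height = len(depth)
    width = len(depth[0])
    points = []
    for row in range(height):
        # Latitude from +pi/2 at the top to -pi/2 at the bottom (pixel centers).
        lat = math.pi / 2 - (row + 0.5) / height * math.pi
        for col in range(width):
            # Longitude from -pi to +pi across the image width (pixel centers).
            lon = (col + 0.5) / width * 2 * math.pi - math.pi
            # Nearer pixels pull the surface inward, toward the viewer.
            r = base_radius * (1.0 - depth_scale * depth[row][col])
            x = r * math.cos(lat) * math.sin(lon)
            y = r * math.sin(lat)
            z = r * math.cos(lat) * math.cos(lon)
            points.append((x, y, z))
    return points
```

A flat depth map leaves every point on the original sphere; in a real scene, foreground features bulge toward the viewer, which is what gives the background its sense of depth.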

When Blockade Labs integrated 3D depth into their product, the quality wasn't where we needed it, so we abandoned this idea. For the backgrounds themselves, we made sure to remove foreground objects from the prompts so they wouldn't conflict with the 3D objects you place in space.

The challenge in creating these immersive backgrounds was how to input the prompt. Typing in VR is slow, and it's difficult to edit your prompt if you make a mistake or don't get the desired results. I wondered if I could use ChatGPT to generate the prompt for Blockade Labs through a back-and-forth conversation.

My first exploration worked quite well. ChatGPT was able to generate the prompts for Blockade Labs with minimal input from me. I simulated the whole experience through a Wizard of Oz approach using a lot of copying and pasting between ChatGPT and ShapesXR.

I then ran an experiment in the ShapesXR Discord where I manually followed the same process with real users, creating spaces for them based on their prompts.

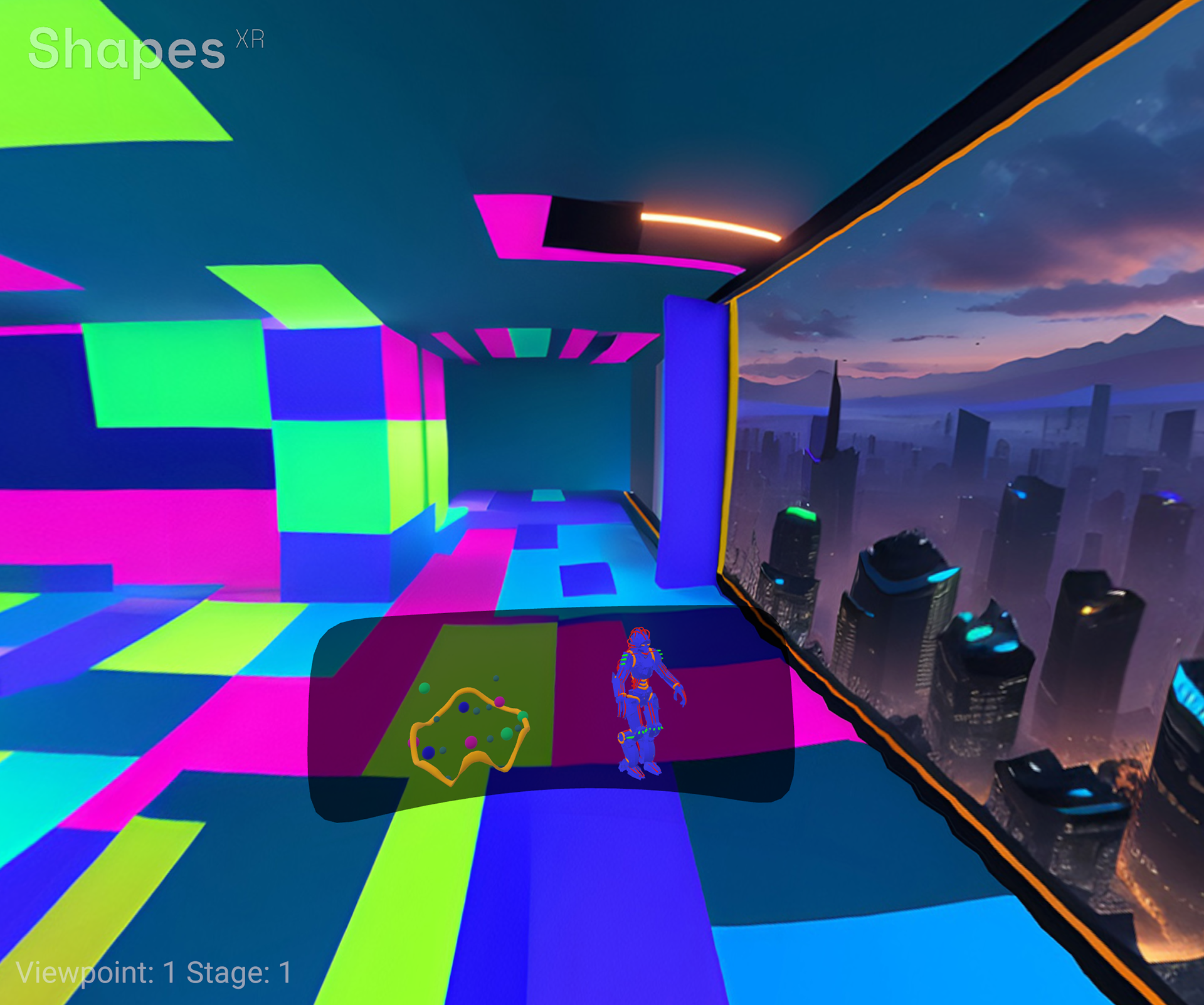

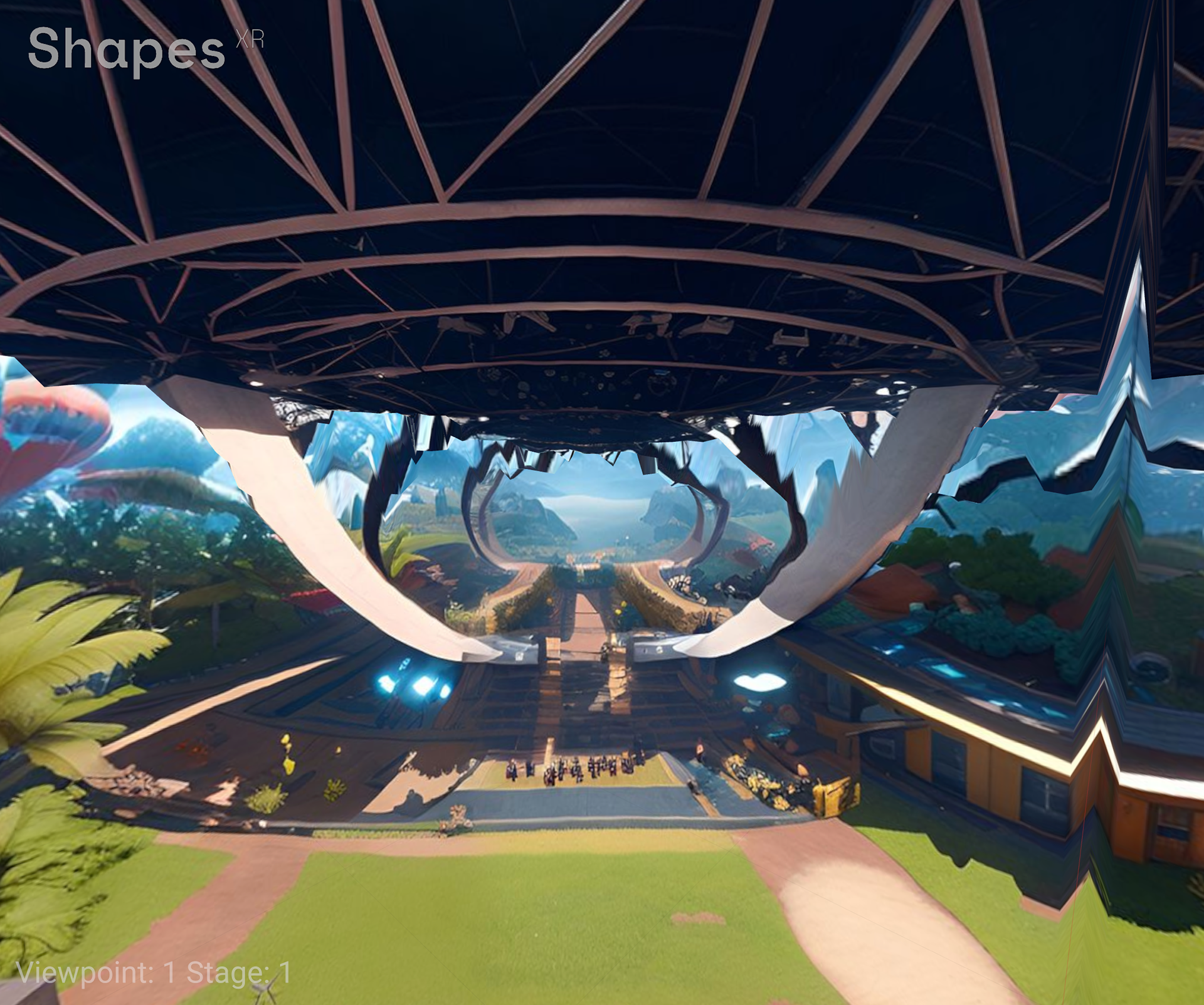

Here are a few screenshots of user-generated content in ShapesXR, created with my BrainstormBot prototype.

Head of Design Responsibilities

I joined ShapesXR at the start of 2022 as head of design, where I led an incredibly talented design team. The team is dedicated to creating the best immersive design tool on the market, working closely with our customers to enhance the product’s capabilities, quality, and reliability. My main responsibilities included managing the team and driving the design, requirements, and roadmap for ShapesXR.

How I try to run my teams

Secondary responsibilities included mentoring designers in our community, crafting inspirational examples for our sales team, and contributing to our design blog. Below you can see one of the classes I taught through XR Bootcamp.

Logitech MX Ink Integration

Even though the new design system made it easy to support the Logitech MX Ink stylus, it still required some design work to address the usability issues we saw when we demoed the stylus in the Meta booth at AWE 2024. I worked on this individually. We had these UX goals:

- Minimize fatigue

- Avoid button confusion

- Quickly swap between controller and pen

- Support diverse users

Here you can see where I landed, as well as some alternative designs.

Below is the announcement video, featuring the ShapesXR content I contributed.