Magic Leap was a client from 2014 to 2016, while I was working at Artefact. I led interaction design for an Artefact team of 12 designers, developers, and artists. Visual design master Craig Erikson led the visual design, and Jon Mann led the client relationship. Our feature areas included the operating system, input, toolkit, visual design system, voice system, web browser, media gallery, media streaming service, OOBE, settings, store, and future scenarios. We had a ton of fun riding the wild unicorn that was Magic Leap.

Finding Unifying Metaphors for Magic Leap One

How do you define something that has never been made before? How do you create a universe that might have different physics than the real world? We wanted to find unifying ideas that would help users quickly understand the interactions inside our new platform. We explored 12 worlds, prototyped our top four, and finally combined them into a single system: The Magic Leap Universe.

I had the team start by coming up with metaphors. We brainstormed 12 worlds, which we ranked as a team and with our stakeholders.

We down-selected to four metaphors and visualized the same user flow for each, using different mechanics. Here you can see one of the flows I built, exploring portals as a metaphor.

At the same time, we were prototyping these concepts in Unity. Our tech artists and game developers created all the assets and interactions here. We found it challenging to iterate on our designs in this first year, but we muddled through the process, eventually creating Storyboard VR so we could go faster in the following years. The following video shows the prototypes for the four main metaphors we landed on: Portals, Constellations, Multi-Tool, and Phantascope.

Rony Abovitz, the Magic Leap CEO, gave us very good direction: combine the best mechanics and metaphors from each world. We took this into the next six months and created a reference prototype for the OS for Magic Leap One.

Patents

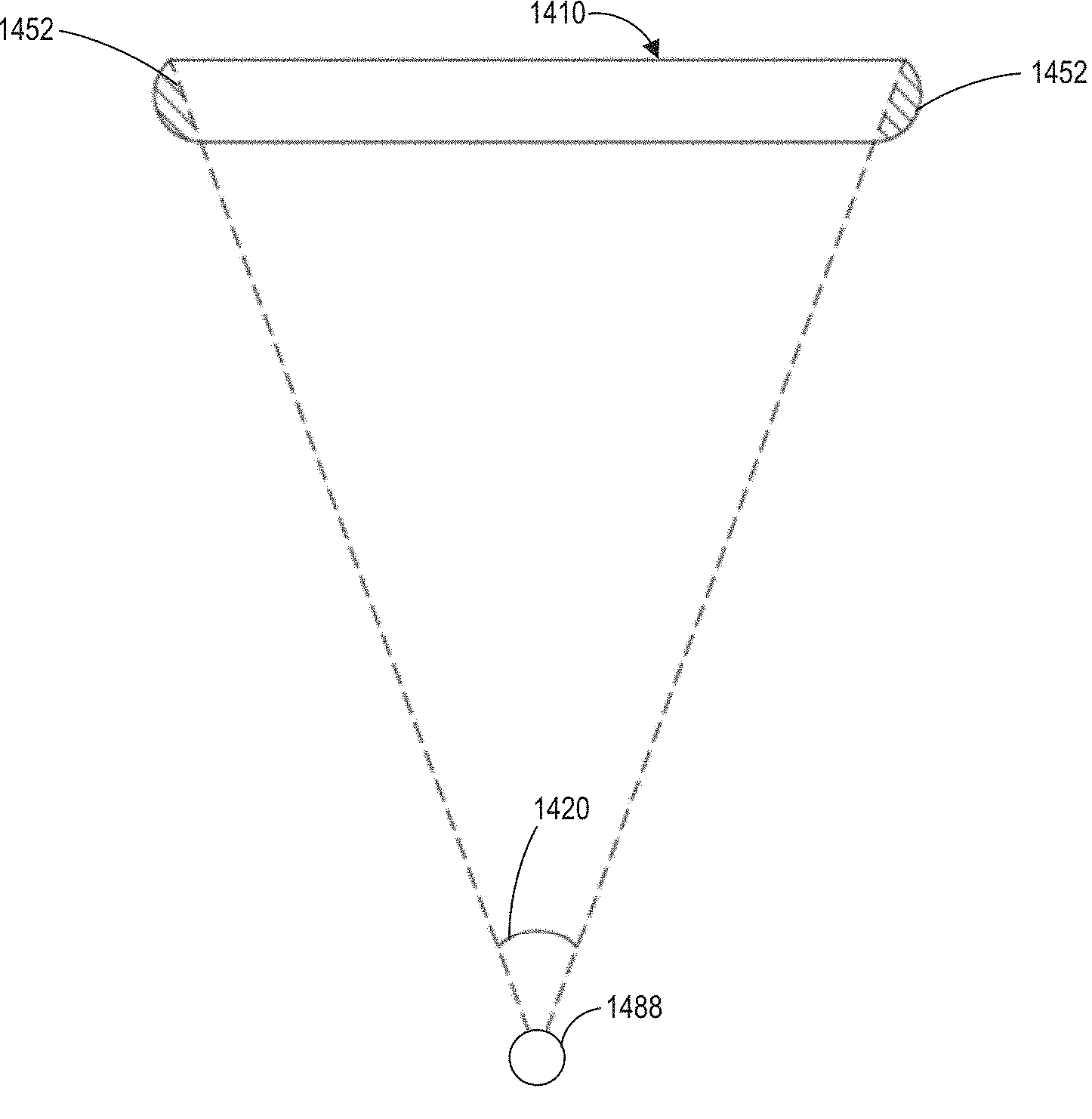

ECLIPSE CURSOR FOR MIXED REALITY DISPLAYS

Patent number: 11367410 - Date of Patent: June 21, 2022

Abstract: Systems and methods for displaying a cursor and a focus indicator associated with real or virtual objects in a virtual, augmented, or mixed reality environment by a wearable display device are disclosed. The system can determine a spatial relationship between a user-movable cursor and a target object within the environment. The system may render a focus indicator (e.g., a halo, shading, or highlighting) around or adjacent objects that are near the cursor. The focus indicator may be emphasized in directions closer to the cursor and deemphasized in directions farther from the cursor. When the cursor overlaps with a target object, the system can render the object in front of the cursor (or not render the cursor at all), so the object is not occluded by the cursor. The cursor and focus indicator can provide the user with positional feedback and help the user navigate among objects in the environment.

Inventors: John Austin Day, Lorena Pazmino, James Cameron Petty, Paul Armistead Hoover, Chris Sorrell, James M. Powderly, Savannah Niles

We were pretty hardcore minimalists on Magic Leap. Our core principle was to enhance the real world, and any pixels we lit up would block it. We wanted our buttons to clearly look like buttons, but we also didn't want them to take up any extra pixels, even on hover. I sort of forget how I came up with it, but I proposed what I called the eclipse cursor: a cursor that would move behind the buttons and cast a glow around them, like the sun eclipsed behind the moon. This solved two problems. One, our cursor didn't interfere with the visibility of the icons and text. Two, it still provided continual feedback as the user moved their input behind and around the buttons. It also allowed the button to stay 100% black, providing maximum contrast for text and icons against cluttered real-world environments.
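The glow behavior can be sketched in a few lines of Python. This is only an illustration of the idea, not the shipped implementation: the function name `rim_glow`, the circular button shape, and the Gaussian falloff are all assumptions made for the example. It computes how bright a point on the button's rim should be, so that the rim glows most on the side nearest the hidden cursor.

```python
import math


def rim_glow(center, radius, cursor, angle_rad, falloff=1.0):
    """Glow intensity (0..1) at one point on a circular button's rim.

    center, cursor: (x, y) positions in the same 2D units.
    angle_rad: which rim point to evaluate, measured around the button.
    falloff: hypothetical tuning value; larger means a wider glow.
    """
    # Position of the rim point being shaded.
    px = center[0] + radius * math.cos(angle_rad)
    py = center[1] + radius * math.sin(angle_rad)
    # Distance from that rim point to the cursor hidden behind the button.
    d = math.hypot(px - cursor[0], py - cursor[1])
    # Assumed Gaussian falloff: brightest where the cursor is closest.
    return math.exp(-((d / falloff) ** 2))


# Cursor sits to the right of the button: the right rim glows more than the left.
near = rim_glow((0.0, 0.0), 1.0, (2.0, 0.0), 0.0)
far = rim_glow((0.0, 0.0), 1.0, (2.0, 0.0), math.pi)
print(near > far)
```

Because only the rim is lit, the face of the button itself never needs to change, which is what keeps the icon and text unobstructed.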

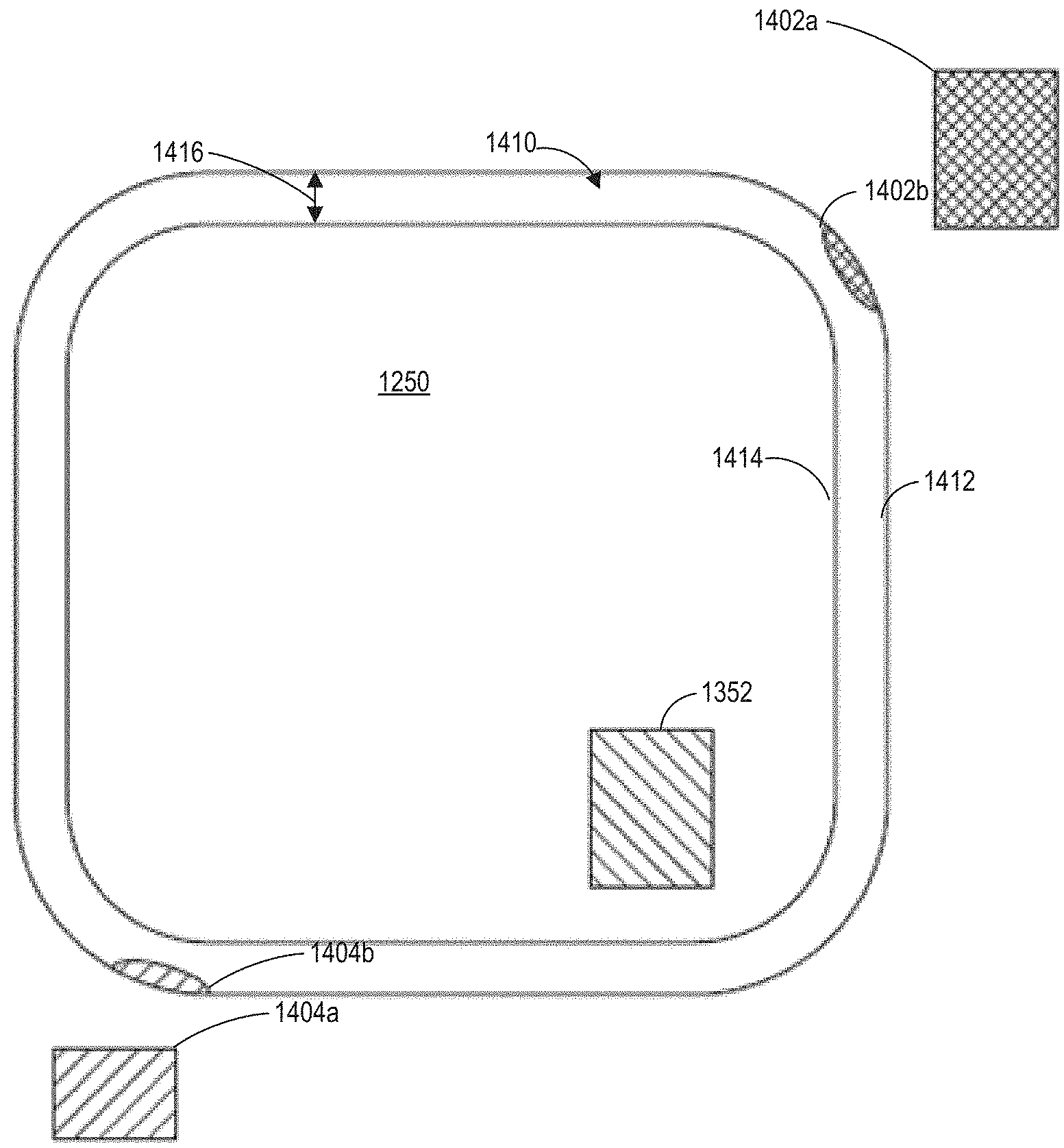

VISUAL AURA AROUND FIELD OF VIEW

Publication number: 20220244776 - Publication date: August 4, 2022

Abstract: A wearable device can have a field of view through which a user can perceive real or virtual objects. The device can display a visual aura representing contextual information associated with an object that is outside the user's field of view. The visual aura can be displayed near an edge of the field of view and can dynamically change as the contextual information associated with the object changes, e.g., the relative position of the object and the user (or the user's field of view) changes.

Inventors: Alysha Naples, Jonathan Lawrence Mann, Paul Armistead Hoover

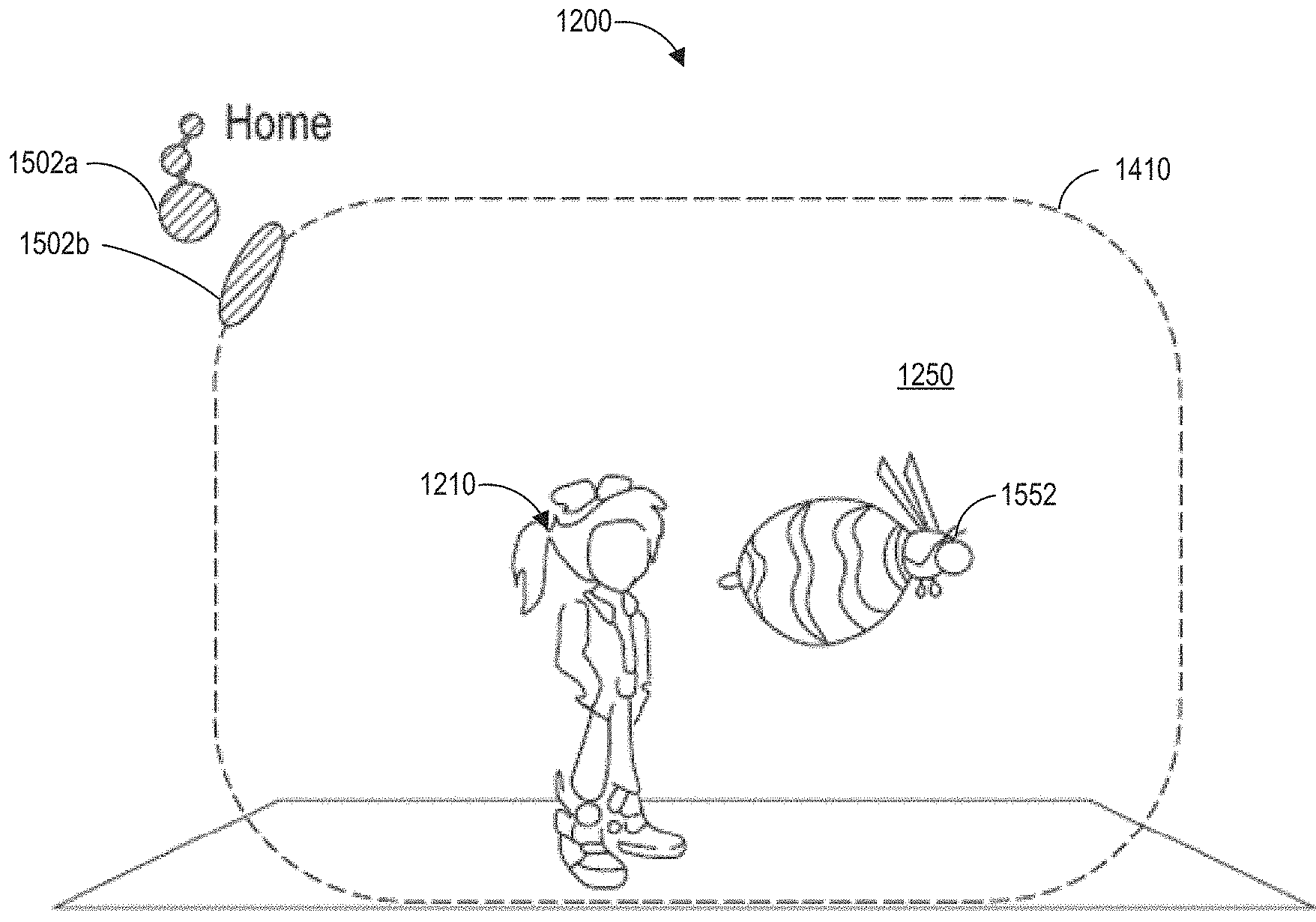

This was an early version of the FOV Aura. We ended up modifying this so that objects behind you wouldn't fill the entire border.

Because the Magic Leap field of view is smallish at 40x30, we needed a way to help users understand and find objects that were outside it. The idea hit me on a plane on the way back from Florida. I got Cinema 4D out on my laptop and created a 3D structure that would fit around each of the user's eyes. Shown here are the top and front profiles.

This structure would catch virtual light projected from objects outside the FOV, similar to the little bits of light you might see at the edge of your glasses when a light is shining on you. I modeled the shape so that it would only reflect light from virtual lights emanating from outside the FOV. Because each eye would see a slightly different reflection, I hoped that the user's brain could pinpoint the location in 3D space. We implemented it in VR and then in AR, and it worked nearly as expected. We found that the stereoscopic nature led to the aura being perceived at a particular depth in space, something I didn't want. To get around this, we only showed the aura in the right eye when the objects were along the right edge of the display, and likewise for the left side. Implemented this way, it felt like peripheral vision: something that you only see in one eye at a time.
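The per-eye rule described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the code we shipped: it treats 40x30 as degrees (horizontal by vertical), the name `aura_eye` is hypothetical, and the handling of objects that are only above or below the view is a placeholder, since the rule above only covers the left and right edges.

```python
from typing import Optional

H_FOV_DEG = 40.0  # assumed horizontal field of view, in degrees
V_FOV_DEG = 30.0  # assumed vertical field of view, in degrees


def aura_eye(azimuth_deg: float, elevation_deg: float) -> Optional[str]:
    """Pick which eye should draw the aura for one object.

    azimuth_deg: horizontal angle from the view direction, positive to the right.
    elevation_deg: vertical angle from the view direction, positive upward.
    Returns None when the object is already inside the field of view.
    """
    inside_h = abs(azimuth_deg) <= H_FOV_DEG / 2
    inside_v = abs(elevation_deg) <= V_FOV_DEG / 2
    if inside_h and inside_v:
        return None  # object is visible, so no aura is needed
    if azimuth_deg > H_FOV_DEG / 2:
        return "right"  # off the right edge: right eye only
    if azimuth_deg < -H_FOV_DEG / 2:
        return "left"  # off the left edge: left eye only
    # Only above or below the view: placeholder choice, not from the design.
    return "both"


print(aura_eye(35.0, 0.0))   # object off to the right
print(aura_eye(-90.0, 10.0)) # object far to the left
```

Showing the aura to a single eye removes the stereo disparity that made it appear at a fixed depth.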

We tested three different designs:

1. Colored dots and objects around the edge of the FOV

2. Arrows around the edge of the FOV

3. Visual aura around the edge of the FOV

In our tests, the visual aura outperformed the colored dots and arrows by a factor of at least 2x, if memory serves. I'm not sure if this feature was ever implemented in the final platform, but I was proud of the work.

There were a few other Magic Leap patents with my name on them. You can find the complete list here.

Paper Prototypes

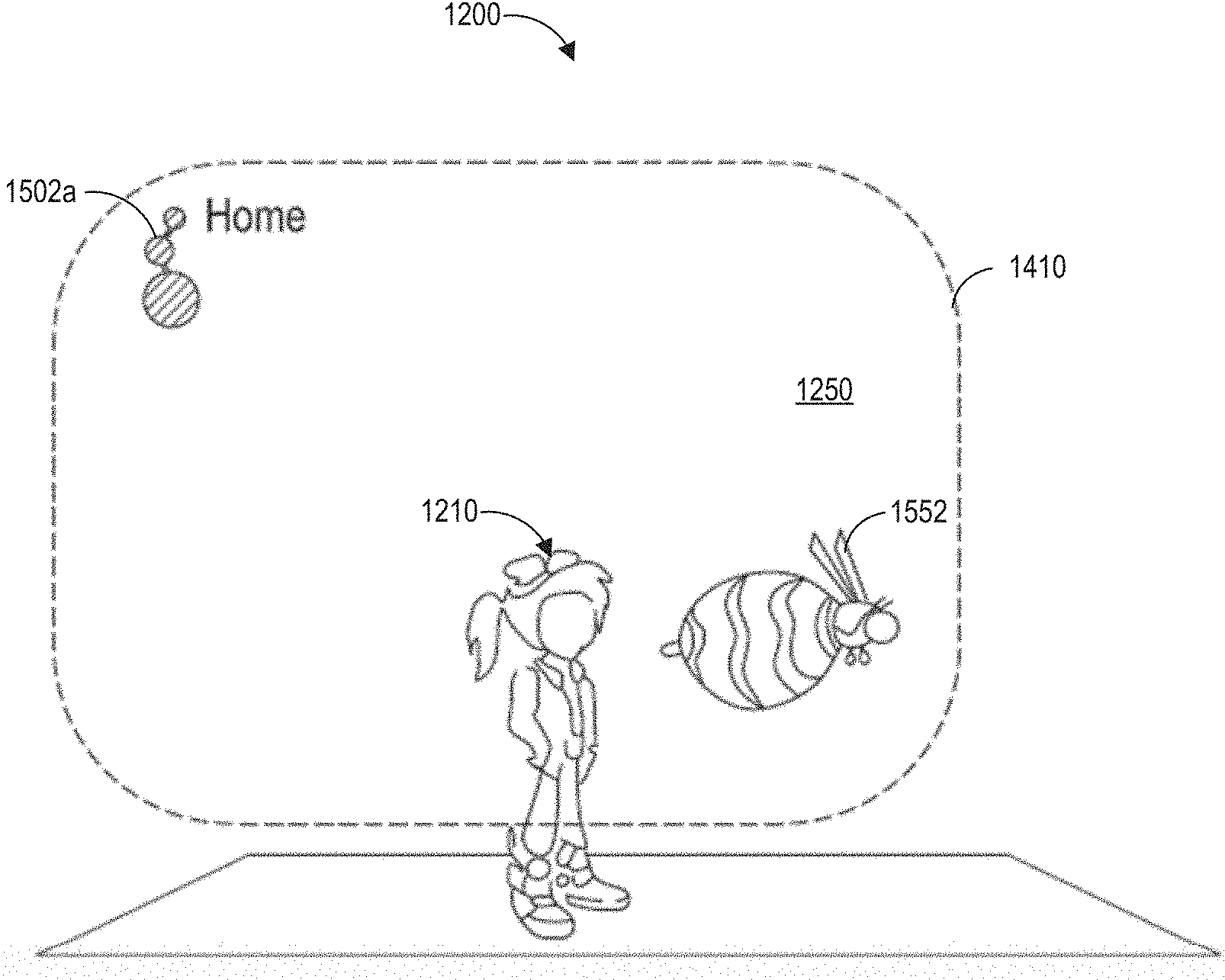

To kick off the engagement between Magic Leap and Artefact, we ran a two-day workshop to brainstorm metaphors and application experiences. On day two, each of us took some time to visualize our ideal applications. I made this video in an hour or two using iMovie and Frameograph on my iPhone. The application would allow you to create and program creatures and hide them in your world. For input, it uses eye tracking, voice, and a 6DOF controller wand. Enjoy! This type of paper prototype was my shtick back in the day, before we created our own design tool for the project.

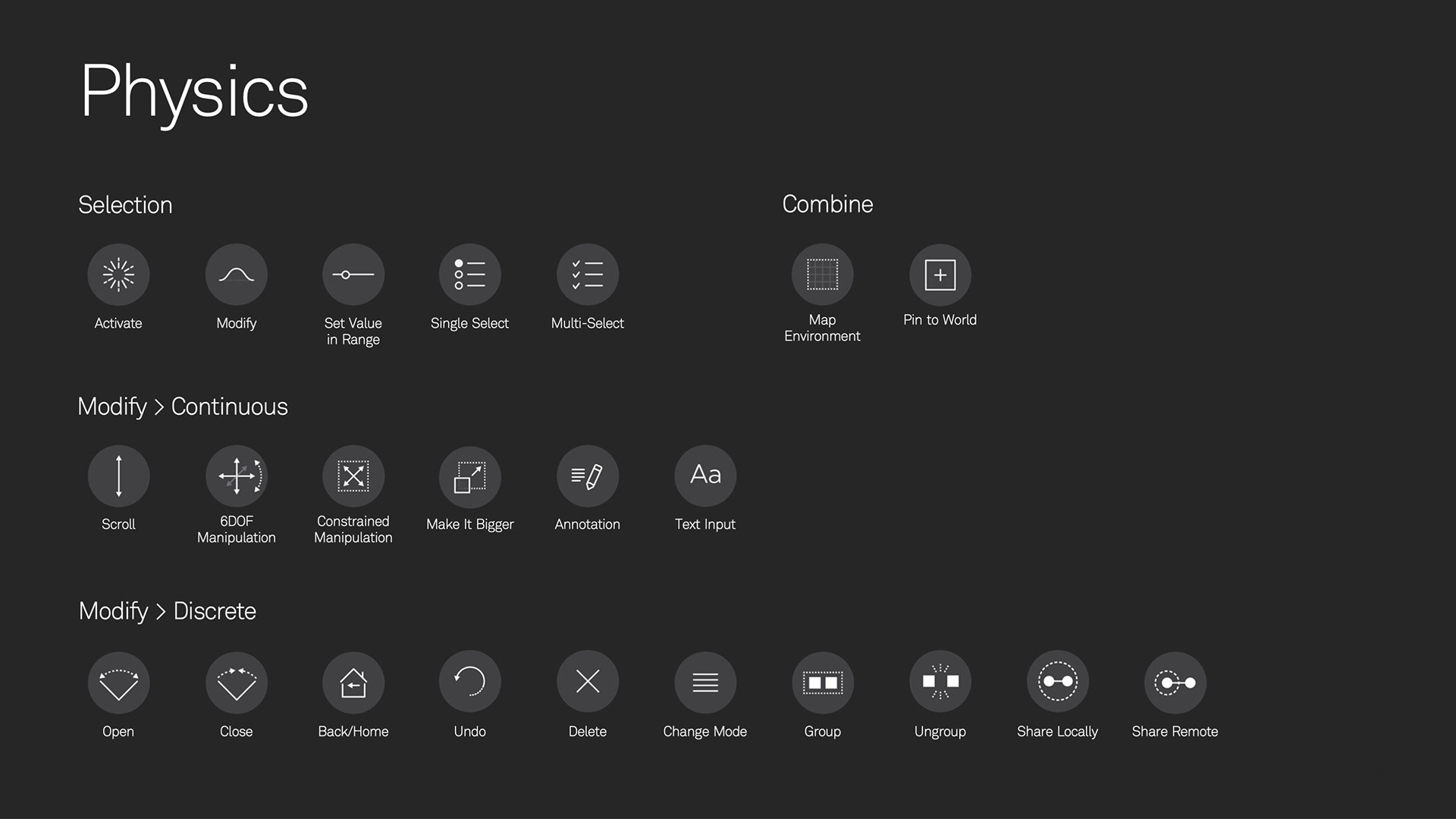

Near the end of our engagement with Magic Leap, I was asked to help define the gesture input language for the product. Somehow this is the only video I could find. It shows some advanced gesture ideas for copying, pasting, and sharing digital files. Most of these concepts didn't make it into my final design, but they show some of my process. The final design can be seen on the Magic Leap Developer website, although the scrolling interaction was changed sometime before shipping. We had a method for scrolling that was less prone to accidental activation than hovering on the edge of the scroll pane. I'm not sure why the team changed course.

Advanced Gesture Concept Video

Storyboard AR

After the first year of working on Magic Leap at Artefact, I was frustrated at the speed we were going. With no VR or AR native design tools, it was still too hard to design and iterate on the UX for the product. The HTC VIVE had just come out, and I saw an opportunity to create a design tool that would accelerate our design throughput. I pitched to Artefact and Magic Leap that we take a 2-week break and create an MVP for what would become Storyboard VR. We went for it and came out of our sprint with a working tool that let us import sphere maps and transparent PNGs into storyboard frames in VR.

After we got the main features implemented, we created a unique AR flavor of the tool, one where you could assign some content to an AR layer and other content to a real-world layer. We made an accurate shader that overlaid the AR content onto the "real-world" background and cropped it to the 40x30 FOV that was the plan of record for Magic Leap One. At the time, it was the only way to see any of our designs accurately represented before handing them off to prototyping and engineering. This tool became a useful way to quickly onboard designers who didn't have AR or VR backgrounds and grow the team.
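To give a feel for what that kind of crop involves, here is a rough Python sketch. It is not the shader from the tool: it assumes 40x30 means degrees, assumes a flat preview image rendered with a simple pinhole camera of known horizontal FOV, and uses plain alpha blending. The names `fov_crop_rect` and `composite` are made up for the example.

```python
import math


def fov_crop_rect(width_px, height_px, preview_hfov_deg,
                  ar_hfov_deg=40.0, ar_vfov_deg=30.0):
    """Pixel rectangle (left, top, right, bottom) covered by the AR display.

    Assumes a pinhole preview camera centered on the image with square pixels.
    """
    # Focal length of the preview camera, expressed in pixels.
    focal_px = (width_px / 2) / math.tan(math.radians(preview_hfov_deg) / 2)
    half_w = focal_px * math.tan(math.radians(ar_hfov_deg) / 2)
    half_h = focal_px * math.tan(math.radians(ar_vfov_deg) / 2)
    cx, cy = width_px / 2, height_px / 2
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)


def composite(bg, ar, alpha, x, y, rect):
    """Blend one AR pixel over the background, but only inside the FOV rect."""
    left, top, right, bottom = rect
    if not (left <= x <= right and top <= y <= bottom):
        return bg  # outside the display area: real world only
    return tuple(a * alpha + b * (1 - alpha) for a, b in zip(ar, bg))


rect = fov_crop_rect(1000, 1000, preview_hfov_deg=90.0)
print(composite((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), 0.5, 500, 500, rect))
print(composite((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), 0.5, 0, 0, rect))
```

Even in this toy version, the cropped region is a small window in the middle of a wide view, which is the constraint designers needed to see early.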

The Path to Shipping

After year 2, we started getting real hardware to develop on. One of the applications we were designing was the Gallery. Shown below is a Unity prototype of the Gallery application running on a pre-production Magic Leap One. We had to work in an army tent inside a warehouse in the Magic Leap office in Seattle. I'd use this time to iterate on visuals with Sam Baker, prototyper and game dev master. I wish I had snagged more of my work from Magic Leap when I left Artefact, but alas, most of it has gone to digital rot.

The gallery ended up looking nearly to spec in the final product.
